Gradient Descent With Momentum

Exponentially weighted moving average

V(t) = beta * V(t-1) + (1 - beta) * theta(t)with beta as the constant, theta(t) as the value at time t.

It is as if we compute the moving average of 1 / (1 - beta) days. Because the older weight decays to less than e beyond those days.

Why don't we use the original moving average formula? This formula is more efficient in terms of memory and computation.

Bias correction in exponentially weighted moving average

Since initially V(0) = 0, on the first few iteration, the moving average result wouldn't look like a true moving average. It is because V(1) = (1 - beta) * theta(1), instead of theta(1). Thus, a bias correction is done such that the result represents the moving average more. It is done by multiplying V(t) with 1 - beta^t.

Gradient descent with momentum

This method almost always work faster than the standard gradient descent. In one sentence, the basic idea is to compute an exponentially weighted average of your gradients, and then use that gradient to update your weights instead.

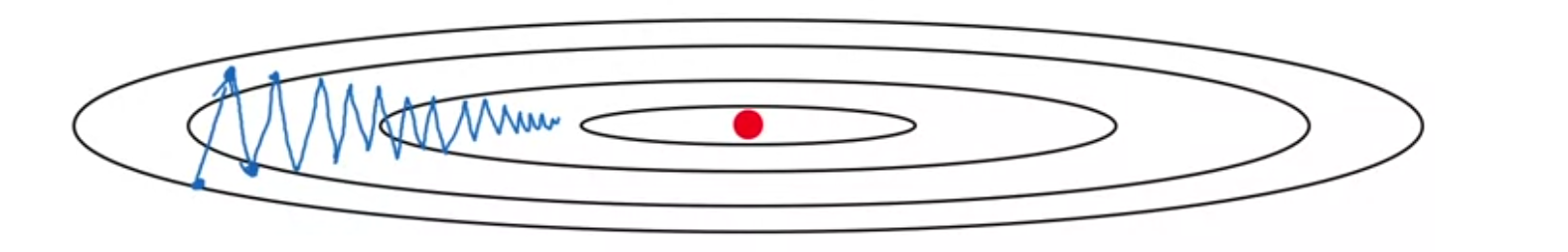

Maybe you start gradient descent here and if you take one iteration of gradient descent either or descent maybe end up heading there. But now you're on the other side of this ellipse, and if you take another step of gradient descent maybe you end up doing that. And then another step, another step, and so on. And you see that gradient descents will sort of take a lot of steps slowly oscillate toward the minimum. And this up and down oscillations slows down gradient descent and prevents you from using a much larger learning rate. In particular, if you were to use a much larger learning rate you might end up over shooting and end up diverging like so. And so the need to prevent the oscillations from getting too big forces you to use a learning rate that's not itself too large. Another way of viewing this problem is that on the vertical axis you want your learning to be a bit slower, because you don't want those oscillations. But on the horizontal axis, you want faster learning.

Implementation Details

vdw = 0, vdb = 0On each iteration t:Compute dW, db on the mini batchvdw = beta * vdw + (1 - beta) * dWvdb = beta * vdb + (1 - beta) * dbW = W - alpha * vdwb = b - alpha * vdb